I needed to take a load of regions (queries) and find those that do not overlap annotated genomic features. I did it very badly a few times, but came up with this alternative that has reduced the cost so much that I'm now bottlenecked by fetching the regions and rendering the output.

val chromId = ... // the chromosome ID

// order by element 1 of a pair by stop

val by_1 = Ordering.by((_:(Int, Set[Long]))._1)

// we're going to build a sorted set from range start to the set of all

// range IDs that start before or at that position

val builder_1 = SortedSet.newBuilder(by_1)

var idsSoFar: Set[Long] = Set.empty

for((start, id) <- from(regions)(r =>

where(r.genomic_sequence_id === chromId)

select(r.start, r.id) orderBy(r.start)))

{

idsSoFar += id

builder_1 += (start -> idsSoFar)

}

val ranges_1 = builder_1.result()

// now we're going to build a sorted set from range end to the set of all

// range IDs that end after that position

val builder_2 = SortedSet.newBuilder(by_1)

var idsSoFar: Set[Long] = Set.empty

for((stop, id) <- from(regions)(r =>

where(r.genomic_sequence_id === chromId)

select(r.stop, r.id) orderBy(r.stop).desc))

{

idsSoFar += id

builder_2 += (stop -> idsSoFar)

}

val ranges_2 = builder_2.result()

// now we can compare a bazillion items to this to see if they overlap

for(query <- queryRegions) {

// slice the sorted set to get all starts that start before the psm stops

val startsBefore = ranges_1.rangeImpl(None, Some(query.stop -> Set.empty))

// slice the sorted set to get all ranges that stop before the psm starts

val stopsAfter = ranges_2.rangeImpl(Some(query.start -> Set.empty), None)

// if one or the other set is empty, we don't have any overlaps

val nonOverlap = if(startsBefore.isEmpty || stopsAfter.isEmpty) true

else {

val (_, lbIds) = startsBefore.lastKey // get the IDs for all

val (_, faIds) = stopsAfter.firstKey

val (smaller, larger) = if(lbIds.size < faIds.size) (lbIds, faIds) else (faIds, lbIds)

val anyShared_? = smaller exists larger

! anyShared_?

}

doSomethingUseful(query, nonOverlap)

}

To get any space efficiency, you must use a structure-sharing immutable set implementation for the sets of range IDs. That way, the total cost of all those sets of range IDs will be approximately linear on the cost of the largest set. If each is a fully independent set, the cost will be quadratic on the number of ranges, which may lead to crying or the killing of kittens.

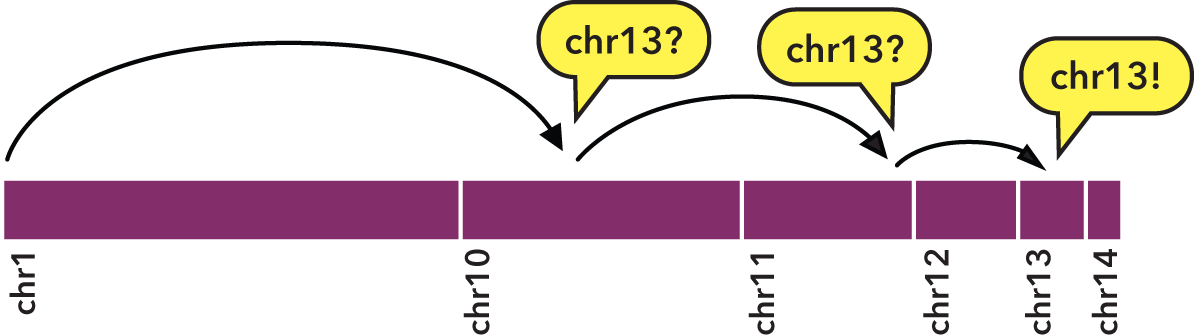

The intuition is that as a overlaps b iff a.start < b.end and b.start > a.end, we can store a pre-computed map of IDs for all a.start < x and a.end > y, and then simply perform set intersection to and these restrictions. If we're only interested in the existence or otherwise of an overlap (not the overlapping regions themselves), then we just need to see if any single item in the smaller set is in the bigger one and stop as soon as we find one.

This could be sped up further by taking advantage of a structured, sorted representation of the ID sets to get performance close to O(log(smaller) x log(larger)). However, I haven't bothered with this yet, as it's fast enough :)

I would try to differentiate between algorithms and complexity and certain implementations and profiling. An algorithm has space and time complexity which is a universally valid feature of the algo, while an implementation has run-time and memory usage, which depend on the algorithm, prog. language, machine type., CPU frequency, etc.

It's worth noting that when doing bioinformatics, I'm usually more interested in the results than the process. So the question for me isn't what's fastest, but what's easiest and fast enough.