Dear All, thank you for your time. I have a very large dataset (17 million x/y pairs in a table). I am trying to find a way to represent it as a graph, but given the number of data points I am unsure of the best strategy. R does not complain when I try to plot a line graph; however, it does not show any graph. The code I am using is correct, as I have tested it with a smaller dataset, and I checked RAM usage: although it goes up to around 8 GB, there is still plenty free. Why does R not report an error if something is going wrong?

The best approach I can come up with is to sample the data, but is this the best or the only option?
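Sampling does not require holding all 17 million rows in memory at once. Below is a minimal sketch of reservoir sampling (Algorithm R), written in Python purely for illustration; the same idea can be written in R. The helper `reservoir_sample` and the synthetic input stream are hypothetical, not part of the original post.

```python
import random
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def reservoir_sample(points: Iterable[Point], k: int, seed: int = 0) -> List[Point]:
    """Return up to k points chosen uniformly at random from a stream (Algorithm R).

    Hypothetical helper: only k points are ever kept in memory, so the input
    can be a generator reading a huge file line by line.
    """
    rng = random.Random(seed)
    reservoir: List[Point] = []
    for i, p in enumerate(points):
        if i < k:
            # Fill the reservoir with the first k points.
            reservoir.append(p)
        else:
            # Keep point i with probability k / (i + 1), replacing a random slot.
            j = rng.randint(0, i)  # randint is inclusive on both ends
            if j < k:
                reservoir[j] = p
    return reservoir


if __name__ == "__main__":
    # Synthetic stand-in for the real x/y table (hypothetical data).
    stream = ((float(i), float(i % 97)) for i in range(200_000))
    sample = reservoir_sample(stream, k=10_000)
    print(len(sample))
```

A uniform sample of a few thousand points usually preserves the overall shape of a scatter plot, but it can miss rare outliers, which is one reason binning (rather than sampling) is often preferred for very large tables.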

Thank you

Dear all, many thanks for all your suggestions; I will try to answer as best I can. The reason for such a large dataset is that I am using the entire UniRef100 database of protein sequences, which contains the following number of entries:

```
kajendiran@serenity:~/Documents$ grep UniRef100_ -c uniref100.fasta
17,598,871
```

I am using the data contained within to calculate the Mw (molecular weight) in kDa (kilodaltons) and another value for each sequence, and then plot these two against each other to represent the entire database. I fully realise that with that many data points, individual points would be difficult to distinguish. I just wanted to see whether it was possible in R, and what the result would look like if I sorted the data in ascending order of one axis and drew a line graph. To produce a legible graph, as someone suggested, I could segregate (bin) the data along an axis or use other methods of which I was not aware.
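One way to "segregate data within an axis" is to split the x range into a fixed number of equal-width bins and summarise y in each bin, so that a line graph needs one point per bin instead of 17 million. The sketch below is in Python for illustration and assumes x and y are already available as two equal-length sequences; `binned_means` is a hypothetical helper, and this is not necessarily how the graph in this thread was produced.

```python
from typing import List, Sequence, Tuple


def binned_means(xs: Sequence[float], ys: Sequence[float], n_bins: int) -> List[Tuple[float, float]]:
    """Split the x range into n_bins equal-width bins.

    Returns (bin centre, mean y) for every non-empty bin, in ascending x order,
    ready to be drawn as a line graph. Hypothetical helper for illustration.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    if n_bins < 1:
        raise ValueError("n_bins must be at least 1")
    if not xs:
        return []
    lo, hi = min(xs), max(xs)
    if hi == lo:
        # All x values identical: a single "bin" at that x.
        return [(lo, sum(ys) / len(ys))]
    width = (hi - lo) / n_bins
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for x, y in zip(xs, ys):
        # Clamp so that x == hi falls into the last bin rather than past it.
        b = min(int((x - lo) / width), n_bins - 1)
        sums[b] += y
        counts[b] += 1
    return [(lo + (b + 0.5) * width, sums[b] / counts[b])
            for b in range(n_bins) if counts[b]]
```

With 17 million rows and, say, 1,000 bins, the resulting line has at most 1,000 points. Other per-bin summaries (median, quantiles) can be swapped in for the mean if the distribution within each bin is skewed.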

I do not have much experience with R, so I was curious to understand why R does not complain when I try to plot such a large amount of data but produces an empty graph. It appears that the size of the dataset alone is the issue, as the same code works perfectly with smaller, but still sizeable, datasets. I also wanted to learn from your experience the best strategy for producing a graph in this scenario.
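When the aim is to show where millions of points fall, rather than to trace them individually, a common alternative to sampling is 2D binning: count the points in each cell of a grid and draw the counts as a heat map. In R, the base graphics function `smoothScatter()` and the hexbin package take related approaches. The sketch below is in Python for illustration and assumes the axis ranges are known in advance (for example from a first pass over the data); `grid_counts` is a hypothetical helper.

```python
from collections import Counter
from typing import Iterable, Tuple


def grid_counts(points: Iterable[Tuple[float, float]],
                x_range: Tuple[float, float],
                y_range: Tuple[float, float],
                nx: int, ny: int) -> Counter:
    """Count points per cell of an nx-by-ny grid over the given ranges.

    Points outside the ranges are skipped. Hypothetical helper: the input
    can be streamed, so memory depends on the grid size, not on the row count.
    """
    (x0, x1), (y0, y1) = x_range, y_range
    dx, dy = (x1 - x0) / nx, (y1 - y0) / ny
    counts: Counter = Counter()
    for x, y in points:
        if not (x0 <= x <= x1 and y0 <= y <= y1):
            continue
        # Clamp so points on the upper edge land in the last cell.
        i = min(int((x - x0) / dx), nx - 1)
        j = min(int((y - y0) / dy), ny - 1)
        counts[(i, j)] += 1
    return counts
```

The resulting counts (at most nx * ny cells) are small enough to plot as an image, usually on a log colour scale because point density in such data tends to vary over several orders of magnitude.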

I agree that this could have been posted on Stack Overflow; however, as someone stated, it is certainly common in bioinformatics to have to deal with obscene amounts of data in certain scenarios. This is what makes the field so exciting, yet at times also painful for CPUs, RAM chips, our patience and our minds. The other reason I posted this question here is that I have asked a few questions this week about different facets of a larger challenge that I am trying to overcome during an internship.

Anyway, I wanted to thank you once again for your time; you have all given me a lot to think about.

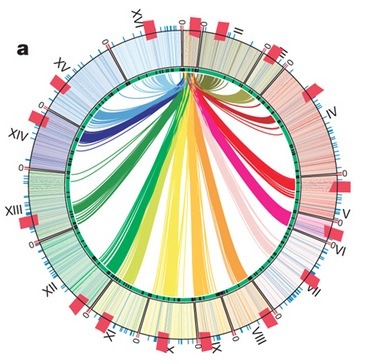

Here is just for anyone interested, the graph I produced by segregating and ordering the mw values according to the y axis:

Could you provide the code snippet and a sample input file? It would also help if you could share any error messages you are getting.

It's very hard to tell what the problem might be without looking at your code.

Please indicate the relevance of this question to a bioinformatics research problem. Otherwise, it's a basic R usage question better suited to stackoverflow.com.

People doing bioinformatics research often have to examine very large data sets, thus questions on the examination of very large data sets are relevant questions to ask of people doing bioinformatics research. Of course the question would be much tastier if the poster had indicated what, exactly, they were trying to examine with 17 million data points. Perhaps people asking such questions could be asked to add a little biology to their posts, as opposed to sending them elsewhere.

Asking for the biological relevance is precisely what I did. And no-one is being "sent" anywhere; merely pointing out that when questions are phrased in terms of R usage, they belong in R usage forums.

I have a different opinion. Though phrased in terms of R usage, I think the question is completely appropriate here. Language usage questions + Context = relevance. The context of this forum is bioinformatics. The context provides unique value not supplied elsewhere. A language usage question asked here can be enlightening in a way that if asked in a language specific forum would be completely opaque (to me).

I would add that I see many questions here being asked without context of the biological problem. These end up with answers where people try to guess what has really been asked and answer based on that. Taking the time to write a question is important. Taking the time to add biological information when this is asked in the comments is also a matter of respect for the time people spend answering questions. Failing to do so is disrespectful.

I have edited the original question and added the additional information given by the poster in an answer. I think, as it stands now, the relation to biology is becoming more clear. In this case, due to the additional information given, it turned out the question could be answered in a much better way. That, I am certain, is beneficial for the whole site and the OP.

Perfect! And thanks for the edit.